I am making good progress on visualizing creel report data. Flask was pretty easy to install. I played with it in a Linux VM as well as locally. So far it is using HTTP, and I’ll want to upgrade to HTTPS after I deploy in AWS.

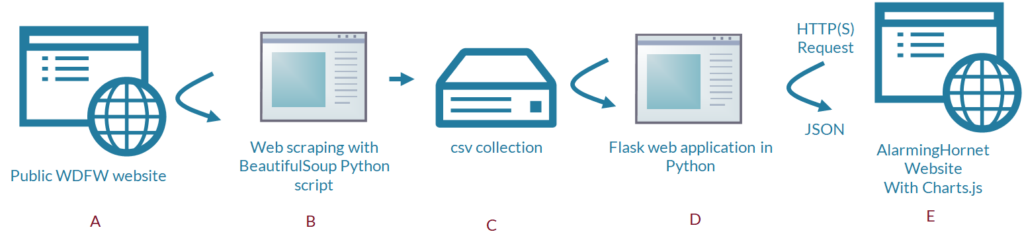

The design of the application so far looks like this.

The web scraping code went through eight major iterations (I always seem to have an existential crisis when deciding on version numbers). This version has these major functions:

- update_USE_CACHE() #decides when to actually re-download WDFW data older than 60 days. Most of the time it doesn’t, since older data is unlikely to change the way new data does. I also don’t want to hit the WDFW website too often. It is public data, but grabbing it all the time seems off.

- add_save_tag() #creates a separate text file with details about the last time data was accessed, saved, etc.

- extract_fish_reports(results,creel_table) #does the heavy lifting for processing the HTML from the WDFW web page

- add_fish_from_csv_url(url,dataname,creel_table) #adds the data for previous years, either from WDFW or the cache, depending on update_USE_CACHE().

- extract_ALL_fish_reports(creel_table) #organizes the web scraping and runs it through extract_fish_reports(results,creel_table)
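The caching decision described in the list above can be sketched as a small pure function. This is only a sketch, not the script's actual code: `should_use_cache` and `max_age_days` are hypothetical names, and the timestamp format is assumed to match the one the script writes to its log file.

```python
from datetime import datetime, timedelta

LOG_TIME_FORMAT = "%m/%d/%Y %I:%M %p"  # assumed to match the script's log format


def should_use_cache(last_grab, now, max_age_days=9):
    """Return True when the archived CSVs were downloaded recently enough.

    last_grab is the timestamp string from the log ('' if never downloaded).
    max_age_days is a hypothetical parameter; the script hard-codes 9.
    """
    if not last_grab:
        return False  # nothing cached yet, so download
    grabbed_at = datetime.strptime(last_grab, LOG_TIME_FORMAT)
    return (now - grabbed_at).days <= max_age_days


now = datetime(2021, 3, 20, 12, 0)
recent = (now - timedelta(days=2)).strftime(LOG_TIME_FORMAT)
stale = (now - timedelta(days=30)).strftime(LOG_TIME_FORMAT)
print(should_use_cache(recent, now))
print(should_use_cache(stale, now))
```

Passing `now` in as an argument, instead of calling `datetime.now()` inside the function, makes the rule easy to check without touching the log file or the clock.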

import requests

import bs4

import csv

from datetime import datetime

import time

import urllib.request

from datetime import date

from bs4 import BeautifulSoup

USE_CACHE = False
last_grab_of_archived_years = ''
creel_table = []
creel_table.append(['Date','Ramp/Site','Catch area','# Interviews','Anglers','Chinook (per angler)','Chinook','Coho','Chum','Pink','Sockeye','Lingcod','Halibut'])

def update_USE_CACHE():
    global USE_CACHE
    global last_grab_of_archived_years
    #read the log written by add_save_tag() to find the last archive download
    with open("creel_import_log.txt", "r") as f:
        for x in f:
            print(x)
            if(x.find('last_grab_of_archived_years')>-1):
                #28 = length of the 'last_grab_of_archived_years:' prefix; the slice also drops the newline
                last_grab_of_archived_years = x[28:len(x)-1]
                print('last_grab_of_archived_years'+str(last_grab_of_archived_years))
    if(last_grab_of_archived_years != ''):
        old_archive_date_dt = datetime.strptime(last_grab_of_archived_years, '%m/%d/%Y %I:%M %p')
        now = datetime.now()
        elapsedTime = now - old_archive_date_dt
        difference_in_days = elapsedTime.days
        print("difference_in_days"+str(difference_in_days))
        #re-download the archived years only when the cached copy is more than 9 days old
        if(difference_in_days > 9):
            USE_CACHE = False
        else:
            USE_CACHE = True
    print('USE_CACHE'+str(USE_CACHE))

def add_save_tag():
    #print('last_grab_of_archived_years'+last_grab_of_archived_years)
    now = datetime.now()
    date_time = now.strftime("%m/%d/%Y %I:%M %p")
    #today = date.today()
    today_str = date_time#today.strftime("%m/%d/%Y")
    #rewrite the log with the time of this run and of the last archive download
    with open("creel_import_log.txt", "w") as text_file:
        text_file.write("last_ran:"+today_str+'\n')
        text_file.write("last_grab_of_archived_years:"+last_grab_of_archived_years+'\n')

def remove_commas(string):
    #drop thousands separators so values like "1,234" can be passed to float()
    return(string.replace(',', ''))

def extract_fish_reports(results,creel_table):

run = True

while(run == True):

results = results[results.find(‘<caption>’)+20:len(results)]

date = results[0:results.find(‘</caption>’)-13]

####print(date)

results = results[results.find(‘<td class=”views-field views-field-location-name” headers=”view-location-name-table-column’):len(results)]

results = results[results.find(‘”>’)+2:len(results)]

ramp = results[0:results.find(‘</td>’)]

i = len(ramp) – 1

while(i>0):#remove ending spaces

if(ramp[i] == ‘ ‘):

i=i-1

else:

ramp = ramp[0:i+1]

i = 0

####print(ramp)

results = results[results.find(‘<td class=”views-field views-field-catch-area-name” headers=”view-catch-area-name-table-column’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

catch_area = results[0:results.find(‘</td>’)]

i = len(catch_area) – 1

while(i>0):#remove ending spaces

if(catch_area[i] == ‘ ‘):

i=i-1

else:

catch_area = catch_area[0:i+1]

i = 0

####print(catch_area)

results = results[results.find(‘<td class=”views-field views-field-boats views-align-right” headers=”view-boats’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

interviews = results[0:results.find(‘</td>’)]

i = len(interviews) – 1

while(i>0):#remove ending spaces

if(interviews[i] == ‘ ‘):

i=i-1

else:

interviews = interviews[0:i+1]

i = 0

####print(interviews)

results = results[results.find(‘<td class=”views-field views-field-anglers views-align-right” headers=”view-anglers-table-column’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

anglers = results[0:results.find(‘</td>’)]

i = len(anglers) – 1

while(i>0):#remove ending spaces

if(anglers[i] == ‘ ‘):

i=i-1

else:

anglers = anglers[0:i+1]

i = 0

####print(anglers)

results = results[results.find(‘<td class=”views-field views-field-chinook-effort views-align-right” headers=”view-chinook’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

chinook_pa = results[0:results.find(‘</td>’)]

i = len(chinook_pa) – 1

while(i>0):#remove ending spaces

if(chinook_pa[i] == ‘ ‘):

i=i-1

else:

chinook_pa = chinook_pa[0:i+1]

i = 0

####print(chinook_pa)

results = results[results.find(‘<td class=”views-field views-field-chinook views-align-right” headers=”view-chinook-table-column-‘)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

chinook = results[0:results.find(‘</td>’)]

i = len(chinook) – 1

while(i>0):#remove ending spaces

if(chinook[i] == ‘ ‘):

i=i-1

else:

chinook = chinook[0:i+1]

i = 0

####print(chinook)

results = results[results.find(‘<td class=”views-field views-field-coho views-align-right” headers=”view-coho-table-col’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

coho = results[0:results.find(‘</td>’)]

i = len(coho) – 1

while(i>0):#remove ending spaces

if(coho[i] == ‘ ‘):

i=i-1

else:

coho = coho[0:i+1]

i = 0

####print(coho)

results = results[results.find(‘<td class=”views-field views-field-chum views-align-right” headers=”view-chum-table-column’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

chum = results[0:results.find(‘</td>’)]

i = len(chum) – 1

while(i>0):#remove ending spaces

if(chum[i] == ‘ ‘):

i=i-1

else:

chum = chum[0:i+1]

i = 0

####print(chum)

results = results[results.find(‘<td class=”views-field views-field-pink views-align-right” headers=”view-pink-table-column-‘)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

pink = results[0:results.find(‘</td>’)]

i = len(pink) – 1

while(i>0):#remove ending spaces

if(pink[i] == ‘ ‘):

i=i-1

else:

pink = pink[0:i+1]

i = 0

####print(pink)

results = results[results.find(‘<td class=”views-field views-field-sockeye views-align-right” headers=”view-sockeye-table-col’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

sockeye = results[0:results.find(‘</td>’)]

i = len(sockeye) – 1

while(i>0):#remove ending spaces

if(sockeye[i] == ‘ ‘):

i=i-1

else:

sockeye = sockeye[0:i+1]

i = 0

####print(sockeye)

results = results[results.find(‘<td class=”views-field views-field-lingcod views-align-right” headers=”view-lin’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

lingcod = results[0:results.find(‘</td>’)]

i = len(lingcod) – 1

while(i>0):#remove ending spaces

if(lingcod[i] == ‘ ‘):

i=i-1

else:

lingcod = lingcod[0:i+1]

i = 0

####print(lingcod)

results = results[results.find(‘<td class=”views-field views-field-halibut views-align-right” headers=”view-halibut-ta’)+90:len(results)]

results = results[results.find(‘”>’)+2:len(results)]

halibut = results[0:results.find(‘</td>’)]

i = len(halibut) – 1

while(i>0):#remove ending spaces

if(halibut[i] == ‘ ‘):

i=i-1

else:

halibut = halibut[0:i+1]

i = 0

####print(halibut)

creel_table.append([date,ramp,catch_area,interviews,anglers,chinook_pa,chinook,coho,chum,pink,sockeye,lingcod,halibut])

if(results.find(‘<caption>’)>-1):

do = ‘nothing’

####print(str(len(results))+’ characters remaining to process’)

else:

####print(‘Process complete’)

run = False

return(creel_table)

i = 0

while(i<len(creel_table)):

####print(creel_table[i])

do = ‘nothing’

i=i+1

def remove_trailing_spaces(string):
    #rstrip(' ') also handles values with a single non-space character,
    #which the index loop skipped because it never looked at position 0
    return(string.rstrip(' '))

def export_as_csv(filename,data):
    ##print('..exporting...')
    with open(filename, 'w', newline='') as csvfile2:
        spamwriter = csv.writer(csvfile2, delimiter=',',
                                quotechar='"', quoting=csv.QUOTE_MINIMAL)
        for row in data:
            spamwriter.writerow(row)

def add_fish_from_csv_url(url,dataname,creel_table):

#USE_CACHE = False #dont want to access WDFW data too much, use previously downloaded files most of the time

##print(‘Current number of records: ‘+str(len(creel_table)))

if(USE_CACHE == False):

#print(‘Downloading ‘+dataname+’ from ‘+url)

r = requests.get(url)

with open(str(dataname+’.csv’), ‘wb’) as f:

f.write(r.content)

#today = date.today()

##print(‘TODAY’)

#today_str = today.strftime(“%m/%d/%Y”)

now = datetime.now()

date_time = now.strftime(“%m/%d/%Y %I:%M %p”)

global last_grab_of_archived_years

last_grab_of_archived_years = date_time

#print(last_grab_of_archived_years)

else:

#print(‘Retreiving previously downloaded data from ‘+dataname+’.csv’)

do = ‘nothing’ #

with open(str(dataname+’.csv’)) as csvfile:

readCSV = csv.reader(csvfile, delimiter=’,’)

i=0

for row in readCSV:

if(i != 0): #ignore header

date2 = remove_trailing_spaces(row[0])

ramp = remove_trailing_spaces(row[1])

catch_area = remove_trailing_spaces(row[2])

interviews = remove_commas(remove_trailing_spaces(row[3]))

anglers = remove_commas(remove_trailing_spaces(row[4]))

chinook_pa = remove_trailing_spaces(row[5])

chinook = remove_commas(remove_trailing_spaces(row[6]))

coho = remove_commas(remove_trailing_spaces(row[7]))

chum = remove_commas(remove_trailing_spaces(row[8]))

pink = remove_commas(remove_trailing_spaces(row[9]))

sockeye = remove_commas(remove_trailing_spaces(row[10]))

lingcod = remove_commas(remove_trailing_spaces(row[11]))

halibut = remove_commas(remove_trailing_spaces(row[12]))

###print(‘ ‘ + row)

creel_table.append([date2,ramp,catch_area,interviews,anglers,chinook_pa,chinook,coho,chum,pink,sockeye,lingcod,halibut])

i+=1

##print(‘New number of records: ‘+str(len(creel_table)))

##print(‘________’)

return(creel_table)

URLYearMinus1 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?page&_format=csv’

URLYearMinus2 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?sample_date=2&ramp=&catch_area=&page&_format=csv’

URLYearMinus3 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?sample_date=3&ramp=&catch_area=&page&_format=csv’

URLYearMinus4 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?sample_date=4&ramp=&catch_area=&page&_format=csv’

URLYearMinus5 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?sample_date=5&ramp=&catch_area=&page&_format=csv’

URLYearMinus6 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?sample_date=6&ramp=&catch_area=&page&_format=csv’

URLYearMinus7 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?sample_date=7&ramp=&catch_area=&page&_format=csv’

URLYearMinus8 = ‘https://wdfw.wa.gov/fishing/reports/creel/puget-annual/export?sample_date=8&ramp=&catch_area=&page&_format=csv’

def extract_ALL_fish_reports(creel_table):

run_count = 0

page_valid = True

while(run_count < 6 and page_valid == True):

#URL = ‘https://wdfw.wa.gov/fishing/reports/creel/puget?ramp=&sample_date=3&catch_area=’

#URL = ‘https://wdfw.wa.gov/fishing/reports/creel/puget?ramp=&sample_date=3’

#URL = ‘https://wdfw.wa.gov/fishing/reports/creel/puget?ramp=&sample_date=3&catch_area=&page=1’

URL = ”

if(run_count == 0):

URL = ‘https://wdfw.wa.gov/fishing/reports/creel/puget?ramp=&sample_date=3&catch_area=’

else:

URL = ‘https://wdfw.wa.gov/fishing/reports/creel/puget?ramp=&sample_date=3&catch_area=&page=’+str(run_count)

r = requests.get(URL)

data = r.text

if(data.find(‘No results found’)>-1):

page_valid = False

#print(page_valid)

if(page_valid == True):

soup = bs4.BeautifulSoup(data, ‘lxml’)

results = soup.find(‘tbody’)

results = results.find_all(‘tr’)

results = soup.findAll(“div”, {“class”: “view-content”})

results = str(results)

#####print(results)

creel_table = extract_fish_reports(results,creel_table)

run_count=run_count+1

return(creel_table)

update_USE_CACHE()

creel_table = extract_ALL_fish_reports(creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus1,’CREELYearMinus1′,creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus2,’CREELYearMinus2′,creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus3,’CREELYearMinus3′,creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus4,’CREELYearMinus4′,creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus5,’CREELYearMinus5′,creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus6,’CREELYearMinus6′,creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus7,’CREELYearMinus7′,creel_table)

creel_table = add_fish_from_csv_url(URLYearMinus8 ,’CREELYearMinus8′,creel_table)

###print the table

i = 0

print_end = i + 10

print(‘printing the first ‘+str(print_end -i)+’ rows of creel_table’)

while(i<print_end):

print(creel_table[i])

do = ‘nothing’

i=i+1

area_13_coho = []

area_13_coho.append([‘Month’,’Jan’,’Feb’,’Mar’,’Apr’,’May’,’Jun’,’Jul’,’Aug’,’Sep’,’Oct’,’Nov’,’Dec’])

area_13_coho.append([‘Anglers’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Chinook’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Chinook/Angler’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Coho’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Coho/Angler’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Chum’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Chum/Angler’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Pink’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Pink/Angler’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Sockeye’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Sockeye/Angler’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Lingcod’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Lingcod/Angler’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Halibut’,0,0,0,0,0,0,0,0,0,0,0,0])

area_13_coho.append([‘Halibut/Angler’,0,0,0,0,0,0,0,0,0,0,0,0])

#zone_to_check = ‘Area 13, South Puget Sound’

area_array = area_13_coho

i=1#skip header

while(i<len(creel_table)):

if(1==1):

#if(creel_table[i][2] == zone_to_check): #might do this later

j = 1 #skip label

while(j<len(area_array[0])):

if(area_array[0][j] == creel_table[i][0][0:3]): ##corrct month

#anglers

area_array[1][j] = area_array[1][j] + float(remove_commas(creel_table[i][4]))

#chinook

fish_row = 2

fish_column = 6

area_array[fish_row][j] = area_array[fish_row][j] + float(remove_commas(creel_table[i][fish_column]))

if(area_array[1][j] != 0):

area_array[fish_row+1][j] = area_array[fish_row][j]/area_array[1][j]

#coho

fish_row = 4

fish_column = 7

area_array[fish_row][j] = area_array[fish_row][j] + float(remove_commas(creel_table[i][fish_column]))

if(area_array[1][j] != 0):

area_array[fish_row+1][j] = area_array[fish_row][j]/area_array[1][j]

#chum

fish_row = 6

fish_column = 8

area_array[fish_row][j] = area_array[fish_row][j] + float(remove_commas(creel_table[i][fish_column]))

if(area_array[1][j] != 0):

area_array[fish_row+1][j] = area_array[fish_row][j]/area_array[1][j]

#pink

fish_row = 8

fish_column = 9

area_array[fish_row][j] = area_array[fish_row][j] + float(remove_commas(creel_table[i][fish_column]))

if(area_array[1][j] != 0):

area_array[fish_row+1][j] = area_array[fish_row][j]/area_array[1][j]

#sockeye

fish_row = 10

fish_column = 10

area_array[fish_row][j] = area_array[fish_row][j] + float(remove_commas(creel_table[i][fish_column]))

if(area_array[1][j] != 0):

area_array[fish_row+1][j] = area_array[fish_row][j]/area_array[1][j]

#lingcod

fish_row = 12

fish_column = 11

area_array[fish_row][j] = area_array[fish_row][j] + float(remove_commas(creel_table[i][fish_column]))

if(area_array[1][j] != 0):

area_array[fish_row+1][j] = area_array[fish_row][j]/area_array[1][j]

#halibut

fish_row = 14

fish_column = 12

area_array[fish_row][j] = area_array[fish_row][j] + float(remove_commas(creel_table[i][fish_column]))

if(area_array[1][j] != 0):

area_array[fish_row+1][j] = area_array[fish_row][j]/area_array[1][j]

####print(creel_table[i][0][0:2])

j=j+1

i=i+1

export_as_csv(“test_data”,area_array)

export_as_csv(“all_creel_data.csv”,creel_table)

#save our data for debugging, etc

with open(“creel_export.csv”,”w”,newline=”) as csvfile:

spamwriter = csv.writer(csvfile, delimiter=’,’)

i = 0

while(i<len(area_13_coho)):

row = area_13_coho[i]

spamwriter.writerow(row)

i=i+1

add_save_tag()

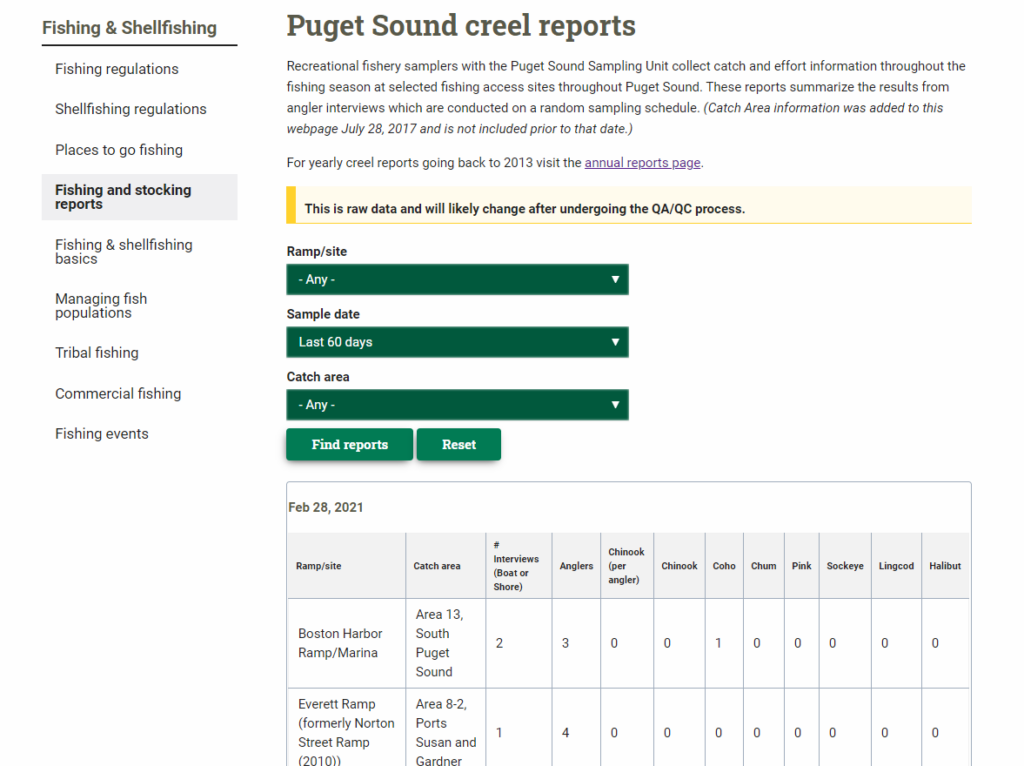

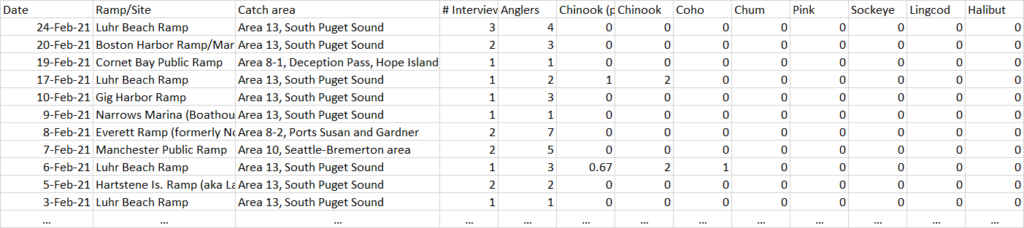

(B) The web scraping script uses BeautifulSoup, requests, and the csv module to grab data from the WDFW website (A). The output is saved as CSV files.
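The string slicing in extract_fish_reports depends on exact attribute text and character offsets, so it is sensitive to small changes in the page. For comparison, here is a minimal sketch of reading table cells by tag instead. It uses the standard library's `html.parser` rather than BeautifulSoup so that it runs without extra packages. The class name `CreelTableParser` and the sample markup are made up for illustration and are not the real WDFW page.

```python
from html.parser import HTMLParser


class CreelTableParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text pieces of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            # strip() removes the padding whitespace around the value
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # skip header rows, which have <th> but no <td>
                self.rows.append(self._row)
            self._row = None


# Made-up sample markup, loosely shaped like one report row
sample = """
<table><caption>Mar 14, 2021</caption>
<thead><tr><th>Ramp/Site</th><th>Catch area</th><th>Anglers</th></tr></thead>
<tbody><tr>
  <td class="views-field"> Example Ramp </td>
  <td class="views-field"> Area 13, South Puget Sound </td>
  <td class="views-field views-align-right"> 12 </td>
</tr></tbody></table>
"""

parser = CreelTableParser()
parser.feed(sample)
print(parser.rows)
```

Because cells are found by tag rather than by offset, the repeated "find, slice, trim" block for each column collapses into one loop over the row.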

import flask

from flask import request, jsonify

from flask_cors import CORS

import csv

import json

import datetime

NUMERICAL_ROWS = [3,4,6,7,8,9,10,11,12] #column indexes of the CSV that hold numbers to be summed
app = flask.Flask(__name__)
CORS(app)
app.config["DEBUG"] = True
all_creel_data = []

def in_list(item,a_list):
    return(item in a_list)

def add_data_to_row(data,row):

i = 0

while(i<len(row)):

if(in_list(i,NUMERICAL_ROWS) == True):

row[i] = float(row[i]) + float(data[i])

i=i+1

return(row)

def load_by_catch_area():

#”all_creel_data.csv”,creel_table) ##Date Ramp/Site Catch area # Interviews Anglers Chinook (per angler) Chinook Coho Chum Pink Sockeye Lingcod Halibut

all_creel_data = []

with open(‘all_creel_data.csv’, newline=”) as csvfile:

spamreader = csv.reader(csvfile, delimiter=’,’, quotechar='”‘)

for row in spamreader:

all_creel_data.append(row)

#build the ID list

IDs = []

i = 1 #skip header

while(i<len(all_creel_data)):

potential_ID_index = 2

potential_ID = all_creel_data[i][potential_ID_index]

if(in_list(potential_ID,IDs) == False):

IDs.append(potential_ID)

i=i+1

#print(IDs)

#build an array based on the IDs

creel_return = []

i = 0

while(i<len(IDs)):

new_row = []

j=0

row_length = len(new_row)

while(row_length < len(all_creel_data[0])):

if(j == potential_ID_index):

new_row.append(IDs[i])

elif(in_list(j,NUMERICAL_ROWS)):

new_row.append(0)

else:

new_row.append(‘NA’)

j=j+1

row_length = len(new_row)

creel_return.append(new_row)

i=i+1

#print(creel_return)

#fill that array with the data

i = 1#skip header

while(i<len(all_creel_data)):

j = 0

while(j<len(creel_return)):

if(creel_return[j][potential_ID_index] == all_creel_data[i][potential_ID_index]):

creel_return[j] = add_data_to_row(all_creel_data[i],creel_return[j])

j=len(creel_return)#break

j=j+1

i=i+1

creel_return.sort()

#print(creel_return)

#convert to json

return_json = {}

i=0

while(i<len(creel_return)):

temp_json = {}

j=0

while(j<len(all_creel_data[0])):

if(j != potential_ID_index):

if(creel_return[i][j] != ‘NA’):

temp_json[all_creel_data[0][j]]=creel_return[i][j]

j=j+1

return_json[creel_return[i][potential_ID_index]]=temp_json

i=i+1

return(return_json)

def load_by_year():

#”all_creel_data.csv”,creel_table) ##Date Ramp/Site Catch area # Interviews Anglers Chinook (per angler) Chinook Coho Chum Pink Sockeye Lingcod Halibut

all_creel_data = []

with open(‘all_creel_data.csv’, newline=”) as csvfile:

spamreader = csv.reader(csvfile, delimiter=’,’, quotechar='”‘)

for row in spamreader:

all_creel_data.append(row)

#add datetime dates to the data

years =[]

i=0

while(i<len(all_creel_data)):

if(i==0):

all_creel_data[0].append(‘Datetime’)

else:

datetime_str = all_creel_data[i][0]

#########print(datetime_str+’ datetime_str’)

datetime_obj = datetime.datetime.strptime(datetime_str, ‘%b %d, %Y’)

#########print(str(datetime_obj)+’ datetime_obj’)

##print(len(all_creel_data[i]))

all_creel_data[i].append(datetime_obj)

##print(all_creel_data[i][13])

year = datetime_str[len(datetime_str)-4:len(datetime_str)]

if(in_list(year,years)==False):

years.append(year)

i=i+1

years.sort()

######print(years)

#build the ID list

IDs = []

i = 1 #skip header

while(i<len(all_creel_data)):

potential_ID_index = 2

potential_ID = all_creel_data[i][potential_ID_index]

if(in_list(potential_ID,IDs) == False):

IDs.append(potential_ID)

i=i+1

##########print(IDs)

#build the data object

##############################

fish = {}

j=0

while(j<len(all_creel_data[0])):

if(in_list(j,NUMERICAL_ROWS) == True):

fish[all_creel_data[0][j]]=0

j=j+1

###print(fish)

months = [‘Jan’,’Feb’,’Mar’,’Apr’,’May’,’Jun’,’Jul’,’Aug’,’Sep’,’Oct’,’Nov’,’Dec’]

months_dict = {}

i = 0

while(i<len(months)):

months_dict[months[i]]=fish.copy()

i=i+1

###print(months_dict)

#convert to json

year_json = {}

i=0

while(i<len(years)):

year_json[years[i]]=months_dict.copy()

i=i+1

year_json[‘5 year average’]=months_dict.copy()

###print(year_json)

return_json = {}

i=0

while(i<len(IDs)):

return_json[IDs[i]]=year_json.copy()

i=i+1

return_json[‘All’]=year_json.copy()#add in an everyhting combined option

#print(return_json)

#having some issues with linking. Turn into a string then back to remove any links

temp = json.dumps(return_json)

return_json = json.loads(temp)

#fill the json with data

i=1#skip header

while(i<len(all_creel_data)):

j=0

key = all_creel_data[i][potential_ID_index]

#year = datetime_str[len(all_creel_data[i][0])-4:len(all_creel_data[i][0])]

datetime_obj = all_creel_data[i][13]

year = datetime_obj.strftime(“%Y”)

month = datetime_obj.strftime(“%b”)

today = datetime.datetime.now()

current_year=today.strftime(“%Y”)

#print(year)

#print(month)

while(j<len(all_creel_data[0])):

if(in_list(j,NUMERICAL_ROWS)==True):

fish_name = all_creel_data[0][j]

fish_number = all_creel_data[i][j]

return_json[key][year][month][fish_name] = float(return_json[key][year][month][fish_name])+float(fish_number)

return_json[‘All’][year][month][fish_name] = float(return_json[‘All’][year][month][fish_name])+float(fish_number)

#add to 5 year average

if(year != current_year):

if(int(current_year)-int(year)<6):#only the five years before the current year, since the sum is divided by 5 later

return_json[key][‘5 year average’][month][fish_name] = float(return_json[key][‘5 year average’][month][fish_name])+float(fish_number)

return_json[‘All’][‘5 year average’][month][fish_name] = float(return_json[‘All’][‘5 year average’][month][fish_name])+float(fish_number)

j=j+1

i=i+1

#divide the 5 year average parts of the json by 5 to get the avg

areas = list(return_json.keys())

fish = list(return_json[‘All’][‘5 year average’][‘Jan’].keys())

months = list(return_json[‘All’][‘5 year average’].keys())

i = 0

while(i<len(areas)):

j = 0

while(j<len(fish)):

k = 0

while(k<len(months)):

return_json[areas[i]][‘5 year average’][months[k]][fish[j]] = float(return_json[areas[i]][‘5 year average’][months[k]][fish[j]])/5

k=k+1

j=j+1

i=i+1

print(return_json[‘All’][‘5 year average’])

#return_json[“Area 10, Seattle-Bremerton area”][‘2013’][‘Apr’][‘Anglers’] = 100

return(return_json)

def load_dataset():

#”all_creel_data.csv”,creel_table)

all_creel_data = []

with open(‘all_creel_data.csv’, newline=”) as csvfile:

spamreader = csv.reader(csvfile, delimiter=’,’, quotechar='”‘)

for row in spamreader:

all_creel_data.append(row)

#create JSON by creating a string first

json_array = []

creel_json = ”

i = 1 #skip csv header

while(i<len(all_creel_data)):

current_creel = ”

#make up ids for each row

current_creel = current_creel + “{”

current_creel = current_creel + ”'””’+”id”+”'”:”’

current_creel = current_creel + str(i)+’,’

#loop through the columns

j = 0

while(j < len(all_creel_data[0])):

current_creel = current_creel + ”'””’+all_creel_data[0][j]+”'”:”’#column name

current_creel = current_creel + ”'””’+all_creel_data[i][j] +”'””’

if(j+1<len(all_creel_data[0])):

current_creel = current_creel +’,’

j = j + 1

current_creel = current_creel + “}”

json_array.append(current_creel)

#print(current_creel)

i=i+1

creel_json = json.loads(json.dumps(json_array))

return(creel_json)

@app.route('/', methods=['GET'])
def home():
    return "<h1>Prototype</h1>"

@app.route('/api/v1/creel/by_year', methods=['GET']) #served at /api/v1/creel/by_year on port 8080
#@crossdomain(origin='*')
def api_catch_year():
    return(load_by_year())

app.run(ssl_context='adhoc',host="0.0.0.0", port="8080")
#app.run(host="0.0.0.0", port="8080")
#app.run(host="0.0.0.0", port="33")

(D) The Flask app, written in Python, serves JSON computed from the CSV.
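load_by_year builds its nested result from template dictionaries with `dict.copy()` and then round-trips the result through `json.dumps` and `json.loads` to get rid of the "linking" between entries. That linking comes from `dict.copy()` being a shallow copy: the outer dictionary is new, but the inner ones are shared. Here is a minimal sketch of the effect, and of `copy.deepcopy` as one alternative, using made-up three-month data:

```python
import copy

months = ["Jan", "Feb", "Mar"]
fish = {"Anglers": 0, "Chinook": 0}

# One template: month -> fish totals
month_template = {m: fish.copy() for m in months}

# Shallow copies: both years point at the SAME inner month dicts
shallow = {"2019": month_template.copy(), "2020": month_template.copy()}
shallow["2019"]["Jan"]["Chinook"] += 5
print(shallow["2020"]["Jan"]["Chinook"])  # 5, the 2020 total changed too

# Deep copies: every year gets its own independent month dicts
month_template = {m: fish.copy() for m in months}
deep = {y: copy.deepcopy(month_template) for y in ("2019", "2020")}
deep["2019"]["Jan"]["Chinook"] += 5
print(deep["2020"]["Jan"]["Chinook"])  # 0, 2020 is untouched
```

The JSON round trip in the app has the same effect as the deep copy here, since every nested value is rebuilt from text. `copy.deepcopy` just states the intent directly.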

<!DOCTYPE html>

<html>

<head>

<title>Alarming Hornet</title>

<link rel=”icon” href=”https://davidhartline.com/alarminghornet/rainbow.png”>

<script src=”https://polyfill.io/v3/polyfill.min.js?features=default”></script>

…

<script src=”https://cdn.jsdelivr.net/npm/chart.js”></script>

<script src=”https://d3js.org/d3.v6.min.js”></script>

<style type=”text/css”>

…

</style>

<script>

var myChart

var json

var keys = []

var years = []

var labels

var color_count = 0 //increases every time a color is pulled for a chart

Chart.defaults.global.elements.line.fill = false;

var xhttp = new XMLHttpRequest();

var fish_options = [‘Chinook’];

var location_str = ‘All’;

var selected_color = ‘#7d1128’;

var unselected_color = ‘#c3b299’;

var button_text_color =’#ffffff’

var first_run = true;

xhttp.onreadystatechange = function () {

//////window.alert(‘onreadystatechange’)

//////window.alert(this.readyState)

if (this.readyState == 4 && this.status == 200) {

BuildChart();

json = JSON.parse(this.response);

//var json_str =JSON.stringify(json)

//JSON.stringify(json[Object.keys(json)[0]]);

keys = Object.keys(json);

//////window.alert(keys)

years = Object.keys(json[keys[0]])

//window.alert(years)

//months = Object.keys(json[keys[0]][years[0]])

labels = [‘Jan’,’Feb’,’Mar’,’Apr’,’May’,’Jun’,’Jul’,’Aug’,’Sep’,’Oct’,’Nov’,’Dec’]

update_dropdown();

//fish_options = Object.keys(json[‘All’][‘2020’][labels[0]]);

if(first_run == true){

load_chart_with_data();

first_run=false;

}

}

update_report_text();

};

xhttp.open(“GET”, “http://127.0.0.1:8080/api/v1/creel/by_year”, true);

xhttp.send();

function load_chart_with_data(location_str, fish_to_display){

//////window.alert(‘load_chart_with_data’)

clearchart()

//////window.alert(JSON.stringify(json[keys[0]][‘2013’]))

//////window.alert(JSON.stringify(json[keys[0]][years[0]][labels[0]][‘Anglers’]))

if(location_str == null){

location_str = ‘All’

}

if(fish_to_display == null){

fish_to_display = [“Chinook”]

}

location_str =location_str

//////window.alert(fish_to_display)

fish_display_index = 0;

while(fish_display_index < fish_to_display.length){

year_index = years.length-1;

//while(year_index<10){

year_str = years[year_index].toString();

var i = 0;

values = [];

label = ”;

//location_str = ‘All’;

fish_str = fish_to_display[fish_display_index]

while(i < labels.length){

//////window.alert(labels[i])

//values.push(JSON.stringify(json[labels[i]][‘Chinook’])/JSON.stringify(json[labels[i]][‘Anglers’]))

if(i==0){

label = ”//location_str.concat(‘ ‘)

label = label.concat(year_str)

if(year_str == “5 year average”){

label = ‘Average’

}

label = label.concat(‘ ‘)

label = label.concat(fish_str)

}

var Anglers = parseFloat(JSON.stringify(json[location_str][year_str][labels[i]][‘Anglers’]))

var fish = parseFloat(JSON.stringify(json[location_str][year_str][labels[i]][fish_str]))

values.push(fish/Anglers)

i++;

}

//////window.alert(values)

addDataset(values,label,getColor(fish_str));

year_index = years.length-2;

year_str = years[year_index].toString();

values = [];

i=0;

while(i < labels.length){

//////window.alert(labels[i])

//values.push(JSON.stringify(json[labels[i]][‘Chinook’])/JSON.stringify(json[labels[i]][‘Anglers’]))

if(i==0){

label = ”//location_str.concat(‘ ‘)

label = label.concat(year_str)

if(year_str == “5 year average”){

label = ‘Average’

}

label = label.concat(‘ ‘)

label = label.concat(fish_str)

}

var Anglers = parseFloat(JSON.stringify(json[location_str][year_str][labels[i]][‘Anglers’]))

var fish = parseFloat(JSON.stringify(json[location_str][year_str][labels[i]][fish_str]))

values.push(fish/Anglers)

i++;

}

//////window.alert(values)

addDataset(values,label,getColor(fish_str,’this_year’));

year_index++;

fish_display_index++;

}

//}

//new_values = []

//new_values.push(values)

// Pass in data and call the chart

//UpdateBuildChart(labels, new_values, “Chinook per Angler”);

};

function getColor(fish, other_info){

pseudo_count = color_count

color = ‘blue’

if(fish == ‘Chinook’){

color = ‘#FCB542’;

}else if(fish ==’Chum’){

color = ‘#A86234’;

}else if(fish==’Coho’){

color = ‘#5DF597’;

}else if(fish==’Halibut’){

color = ‘#A851A8’;

}else if(fish==’Lingcod’){

color = ‘#2A59DE’;

}else if(fish==’Pink’){

color = ‘#2A59DE’;

}else if(fish==’Sockeye’){

color = ‘#F50505’;

}

if(other_info != null){

//window.alert(color)

color_hex = color.substring(1,color.length);

R_hex =color_hex.substring(0,2);

G_hex =color_hex.substring(2,4);

B_hex =color_hex.substring(4,6);

//window.alert(R_hex)

//window.alert(G_hex)

//window.alert(B_hex)

R_hex = parseInt(parseInt(R_hex,16) - (parseInt(R_hex,16)/2))

G_hex = parseInt(parseInt(G_hex,16) - (parseInt(G_hex,16)/2))

B_hex = parseInt(parseInt(B_hex,16) - (parseInt(B_hex,16)/2))

//color_hex = parseInt(color_hex,16);

//color_hex = parseInt(color_hex - (parseInt('F50505',16)))

//pad each channel to two hex digits so values below 16 still produce a valid color

color = '#'+R_hex.toString(16).padStart(2,'0')+G_hex.toString(16).padStart(2,'0')+B_hex.toString(16).padStart(2,'0');

//window.alert(color)

}

color_count++;

return(color);

}

function clearchart() {

//myChart.destroy();

chart = myChart;

//////window.alert(myChart.data.datasets.length)

number_of_datasets = chart.data.datasets.length;

i=0;

while(i<number_of_datasets){

chart.config.data.datasets.pop();

i++;

}

// myChart.data.datasets.forEach((dataset) => {

// ////window.alert(String(dataset))

// dataset.data.pop();

// });

// myChart.update();

}

function addDataset(data,label,color) {

chart = myChart

label = label

var newDataset = {

label: label,

backgroundColor: color,

borderColor: color,

data: data,

fill: false

};

if(location_str==’All’){

chart.options.title.text = location_str+’ Puget Sound Areas’

}else{

chart.options.title.text = location_str

}

chart.config.data.datasets.push(newDataset);

chart.update();

}

function BuildChart() {

//window.alert(‘test’)

if(first_run == true){

myChart = new Chart(document.getElementById(“line-chart”), {

type: ‘line’,

data: {

labels: [‘January’, ‘February’, ‘March’, ‘April’, ‘May’, ‘June’, ‘July’,’August’,’September’,’October’,’November’,’December’],

datasets: [{

// label: ‘My First dataset’,

// backgroundColor: “red”,//window.chartColors.blue,

// borderColor: “red”,//window.chartColors.blue,

// data: [

// ],

// fill: false,

}]

},

options: {

responsive: true,

title: {

display: true,

text: ‘loading…’

}

}

});

}

}

function setColor(){

//deslect all fish colors, then put them back where active

document.getElementById(‘All Fish’).style.background=unselected_color;

document.getElementById(‘All Fish’).style.color=button_text_color;

document.getElementById(‘All Salmon’).style.background=unselected_color;

document.getElementById(‘All Salmon’).style.color=button_text_color;

document.getElementById(‘Chinook’).style.background=unselected_color;

document.getElementById(‘Chinook’).style.color=button_text_color;

document.getElementById(‘Chum’).style.background=unselected_color;

document.getElementById(‘Chum’).style.color=button_text_color;

document.getElementById(‘Coho’).style.background=unselected_color;

document.getElementById(‘Coho’).style.color=button_text_color;

document.getElementById(‘Halibut’).style.background=unselected_color;

document.getElementById(‘Halibut’).style.color=button_text_color;

document.getElementById(‘Lingcod’).style.background=unselected_color;

document.getElementById(‘Lingcod’).style.color=button_text_color;

document.getElementById(‘Pink’).style.background=unselected_color;

document.getElementById(‘Pink’).style.color=button_text_color;

document.getElementById(‘Sockeye’).style.background=unselected_color;

document.getElementById(‘Sockeye’).style.color=button_text_color;

i=0;

salmon_count = 0;

fish_count = 0;

while(i<fish_options.length){

if(fish_options[i]==’Chinook’){

document.getElementById(‘Chinook’).style.background=selected_color;

salmon_count++;

fish_count++;

}if(fish_options[i]==’Chum’){

document.getElementById(‘Chum’).style.background=selected_color;

salmon_count++;

fish_count++;

}if(fish_options[i]==’Coho’){

document.getElementById(‘Coho’).style.background=selected_color;

salmon_count++;

fish_count++;

}if(fish_options[i]==’Halibut’){

document.getElementById(‘Halibut’).style.background=selected_color;

fish_count++;

}if(fish_options[i]==’Lingcod’){

document.getElementById(‘Lingcod’).style.background=selected_color;

fish_count++;

}if(fish_options[i]==’Pink’){

document.getElementById(‘Pink’).style.background=selected_color;

fish_count++;

salmon_count++;

}if(fish_options[i]==’Sockeye’){

document.getElementById(‘Sockeye’).style.background=selected_color;

fish_count++;

salmon_count++;

}

i++;

}

if(fish_count==7){

document.getElementById(‘All Fish’).style.background=selected_color;

}

if(salmon_count==5){

document.getElementById(‘All Salmon’).style.background=selected_color;

}

update_report_text();

if(first_run != true){

if(location_str==’All’){

chart.options.title.text = location_str+’ Puget Sound Areas’

}else{

chart.options.title.text = location_str

}

}

}

window.onload = function() {

//xhttp.open(“GET”, “http://127.0.0.1:5000/api/v1/creel/by_year”, true);

//xhttp.open(“GET”, “https://forbes400.herokuapp.com/api/forbes400?limit=10”, true);

//xhttp.send();

BuildChart();

update_dropdown();

//BuildChart();

setColor();

//setWidth();

//load_chart_with_data();

}

function update_dropdown(){

var select = document.getElementById(“selectNumber”);

var options = keys//[“1”, “2”, “3”, “4”, “5”];

for(var i = 0; i < options.length; i++) {

if(options[i]!=’All’&& options[i] != ‘N/A’ && options[i] != ‘Sinclair Inlet’ && options[i] != ‘Dungeness Bay’ && options[i] != ‘Commencement Bay’ && options[i] != ‘Quilicene, Dabob Bay’ && options[i] != ”){

var opt = options[i];

var el = document.createElement(“option”);

el.textContent = opt;

el.value = opt;

select.appendChild(el);

}

}

//attach the change handler once, after the options are built, instead of on every loop pass

select.onchange = function() {

load_chart_with_data(this.value,fish_options);

location_str = this.value

setColor();

return false

};

}

function add_or_remove_fish(fish){

//check if it is already displayed, add if not there, remove if so

if(fish==’All Fish’){

fish_options = [“Chinook”,”Chum”,”Coho”,”Halibut”,”Lingcod”,”Pink”,”Sockeye”]

} else if (fish==’All Salmon’){

fish_options = [“Chinook”,”Chum”,”Coho”,”Pink”,”Sockeye”]

} else {

in_list = false;

i=0;

while(i<fish_options.length){

if(fish_options[i]==fish){

in_list = true;

i = fish_options.length;

}

i++;

}

if(in_list==false){

fish_options.push(fish);

} else {

i=0;

temp = [];

while(i<fish_options.length){

if(fish_options[i] != fish){

temp.push(fish_options[i]);

}

i++;

}

fish_options = temp

}

}

//////window.alert(fish_options);

load_chart_with_data(location_str, fish_options);

setColor();

}

function get_index(element, array){

i = 0;

position = -1;//for error

while(i<array.length){

if(array[i]==element){

position = i;

i=array.length;

}

i++;

}

return(position)

}

function swap(posA,posB,array){

i=0;

new_array=[];

while(i<array.length){

if(i== posA){

new_array.push(array[posB]);

}else if (i==posB){

new_array.push(array[posA]);

}else{

new_array.push(array[i]);

}

i++;

}

return(new_array);

}

function update_report_text(){

var header = ”

let output_array = []

output_array.push([‘Fish’,’Month’,’Catch compared to that month in previous years’,’Catch compared to the average high for a year’,’Details’])

////window.alert(output_array[0][0])

if(first_run!=true){

var new_output_array_line = []

if(location_str==’All’){

header+=’For all Puget Sound areas combined’;

}else{

header+=’For the Puget Sound fishing area “‘

temp = location_str.concat(”)

header+=temp;

header+='”‘

}

//get the latest info for the area

var newest_creel = ”;

years = Object.keys(json[location_str]);

newest_year = 0;

i=0;

while(i<years.length){

if(parseInt(years[i])>newest_year){

newest_year = parseInt(years[i]);

}

i++;

}

months = [‘Jan’,’Feb’,’Mar’,’Apr’,’May’,’Jun’,’Jul’,’Aug’,’Sep’,’Oct’,’Nov’,’Dec’]

var newest_month_index = 0;

i=0;

while(i<months.length){

if(parseInt(json[location_str][newest_year.toString()][months[i]][‘Anglers’])>0){

newest_month_index = i;

}

i++;

}

angler_count = JSON.stringify(json[location_str][newest_year.toString()][months[newest_month_index]][‘Anglers’]);

average_angler_count=JSON.stringify(json[location_str][‘5 year average’][months[newest_month_index]][‘Anglers’]);

fish_index = 0;

//////window.alert(fish_options.length);

while(fish_index<fish_options.length){

var details_str = ”

//get the average peak for a year

i = 0;

high_month_index = 0;

high_monthly_catch_per_angler = 0.0;

while(i<months.length){

monthly_catch_count = JSON.stringify(json[location_str][‘5 year average’][months[i]][fish_options[fish_index]])

monthly_angler_count = JSON.stringify(json[location_str][‘5 year average’][months[i]][‘Anglers’]);

monthly_catch_per_angler = parseFloat(monthly_catch_count)/parseFloat(monthly_angler_count)

if(monthly_catch_per_angler > high_monthly_catch_per_angler){

high_monthly_catch_per_angler = monthly_catch_per_angler

high_month_index = i;

}

i++;

}

////window.alert(high_monthly_catch_per_angler)

details_str+=’So far this ‘+months[newest_month_index]+’, WDFW has interviewed ‘

angler_count = JSON.stringify(json[location_str][newest_year.toString()][months[newest_month_index]][‘Anglers’]);

details_str+=angler_count+’ anglers and found ‘

fish_count = JSON.stringify(json[location_str][newest_year.toString()][months[newest_month_index]][fish_options[fish_index]])

////window.alert(fish_count)

angler_count = JSON.stringify(json[location_str][newest_year.toString()][months[newest_month_index]][‘Anglers’]);

details_str+=’ ‘

details_str+=fish_count

fish_type = fish_options[fish_index]

new_output_array_line.push(‘<p style=”color:’+getColor(fish_type,’label’)+'”>’+fish_type+'</p>’)

new_output_array_line.push(‘<p style=”color:’+getColor(fish_type,’label’)+'”>’+months[newest_month_index]+'</p>’)

details_str+=’ ‘

details_str+=fish_type+”

fish_per_angler = parseFloat(fish_count)/parseFloat(angler_count)

catch_compared_to_high = ”

compared_to_peak = (((fish_per_angler-high_monthly_catch_per_angler)/high_monthly_catch_per_angler)*100)

if(compared_to_peak>10){ //more than 10% above the typical peak, mirroring the -10% cutoff below

catch_compared_to_high+='<p style=”color:green”>Higher (‘+(Math.round(compared_to_peak * 100)/100)+’%)’

}else if (compared_to_peak>=(-10.0)){

catch_compared_to_high+='<p style=”color:gray”>About the same (‘+(Math.round(compared_to_peak * 100)/100)+’%)’

}else if (compared_to_peak<(-10.0)){

catch_compared_to_high+='<p style=”color:red”>Lower (‘+(Math.round(compared_to_peak * 100)/100)+’%)’

}else{

catch_compared_to_high+='<p style=”color:gray”>-‘

}

catch_compared_to_high+='</p>’

insert_str =”

if(compared_to_peak>0){ //any positive difference counts as higher than the peak

insert_str+=’ This ‘ +months[newest_month_index]+’ was ‘+(Math.round(compared_to_peak * 10)/10)+’% higher than that.’

}else if(compared_to_peak<=(0.0)){

insert_str+=’ This ‘ +months[newest_month_index]+’ was ‘+(Math.round(compared_to_peak * 10)/10)*-1+’% lower than that.’

}

details_str+=’, or ‘+(Math.round(fish_per_angler * 1000)/1000)+’ ‘+fish_type+’/angler.’

average_fish_count = JSON.stringify(json[location_str][‘5 year average’][months[newest_month_index]][fish_options[fish_index]])

average_angler_count=JSON.stringify(json[location_str][‘5 year average’][months[newest_month_index]][‘Anglers’]);

average_fish_per_angler = parseFloat(average_fish_count)/parseFloat(average_angler_count)

improvement = (((fish_per_angler-average_fish_per_angler)/average_fish_per_angler)*100)

if(improvement >= 0.0){

details_str+='<br><br>That is ‘+(Math.round(improvement * 10)/10)

details_str+=’% better than ‘

details_str+=’the average for ‘+months[newest_month_index]+’. ‘

}else if (improvement < 0.0){

details_str+='<br><br>That is ‘+(Math.round(improvement * 10)/10)*-1

details_str+=’% worse than ‘

details_str+=’the average for ‘+months[newest_month_index]+’. ‘

}else{

details_str+='<br><br>’

}

catch_str = ”

if(improvement >= 5.0){

catch_str += ‘<p style=”color:green”>Up (+’

catch_str +=(Math.round(improvement * 10)/10)

catch_str += ‘%)’

}else if (improvement >= -5.0){

catch_str += '<p style="color:gray">Approx. Neutral ('

catch_str += (Math.round(improvement * 10)/10)

catch_str += ‘%)’

}else if (improvement < -5.0 ){

catch_str += ‘<p style=”color:red”>Down (‘

catch_str += (Math.round(improvement * 10)/10)

catch_str += ‘%)’

} else {

catch_str += ‘<p style=”color:gray”>-‘

}

new_output_array_line.push(catch_str+'</p>’)

new_output_array_line.push(catch_compared_to_high)

details_str+=’In the previous five years, ‘

details_str+=average_angler_count

details_str+=’ anglers/year were interviewed and ‘

details_str+=average_fish_count

details_str+=’ ‘

details_str+=fish_type

details_str+=’ found, equating to ‘

details_str+=(Math.round(average_fish_per_angler * 1000)/1000)

details_str+=’ ‘

details_str+=fish_type

details_str+=’/angler. ‘

details_str+='<br><br>The typical highest catch for a year for ‘+fish_options[fish_index]+’ is in ‘+months[high_month_index]+’ at ‘+(Math.round(high_monthly_catch_per_angler * 10000)/10000)+’ ‘+fish_options[fish_index]+’/angler. ‘+insert_str

new_output_array_line.push(details_str)

output_array.push(new_output_array_line)

new_output_array_line=[]

fish_index++;

}

///go through the fish again, but for last month

fish_index = 0;

if (newest_month_index == 0){

newest_month_index = 11;

} else {

newest_month_index--;

}

//////window.alert(fish_options.length);

while(fish_index<fish_options.length){

var details_str = ”

//get the average peak for a year

i = 0;

high_month_index = 0;

high_monthly_catch_per_angler = 0.0;

while(i<months.length){

monthly_catch_count = JSON.stringify(json[location_str][‘5 year average’][months[i]][fish_options[fish_index]])

monthly_angler_count = JSON.stringify(json[location_str][‘5 year average’][months[i]][‘Anglers’]);

monthly_catch_per_angler = parseFloat(monthly_catch_count)/parseFloat(monthly_angler_count)

if(monthly_catch_per_angler > high_monthly_catch_per_angler){

high_monthly_catch_per_angler = monthly_catch_per_angler

high_month_index = i;

}

i++;

}

////window.alert(high_monthly_catch_per_angler)

details_str+=’This ‘+months[newest_month_index]+’, WDFW interviewed ‘

angler_count = JSON.stringify(json[location_str][newest_year.toString()][months[newest_month_index]][‘Anglers’]);

details_str+=angler_count+’ anglers and found ‘

fish_count = JSON.stringify(json[location_str][newest_year.toString()][months[newest_month_index]][fish_options[fish_index]])

////window.alert(fish_count)

angler_count = JSON.stringify(json[location_str][newest_year.toString()][months[newest_month_index]][‘Anglers’]);

details_str+=’ ‘

details_str+=fish_count

fish_type = fish_options[fish_index]

new_output_array_line.push(‘<p style=”color:’+getColor(fish_type,’label’)+'”>’+fish_type+'</p>’)

new_output_array_line.push(‘<p style=”color:’+getColor(fish_type,’label’)+'”>’+months[newest_month_index]+'</p>’)

details_str+=’ ‘

details_str+=fish_type+”

fish_per_angler = parseFloat(fish_count)/parseFloat(angler_count)

catch_compared_to_high = ”

compared_to_peak = (((fish_per_angler-high_monthly_catch_per_angler)/high_monthly_catch_per_angler)*100)

if(compared_to_peak>10){ //more than 10% above the typical peak, mirroring the -10% cutoff below

catch_compared_to_high+='<p style=”color:green”>Higher (‘+(Math.round(compared_to_peak * 100)/100)+’%)’

}else if (compared_to_peak>=(-10.0)){

catch_compared_to_high+='<p style=”color:gray”>About the same (‘+(Math.round(compared_to_peak * 100)/100)+’%)’

}else if (compared_to_peak<(-10.0)){

catch_compared_to_high+='<p style=”color:red”>Lower (‘+(Math.round(compared_to_peak * 100)/100)+’%)’

}else{

catch_compared_to_high+='<p style=”color:gray”>-‘

}

catch_compared_to_high+='</p>’

insert_str =”

if(compared_to_peak>0){ //any positive difference counts as higher than the peak

insert_str+=’ This ‘ +months[newest_month_index]+’ was ‘+(Math.round(compared_to_peak * 10)/10)+’% higher than that.’

}else if(compared_to_peak<=(0.0)){

insert_str+=’ This ‘ +months[newest_month_index]+’ was ‘+(Math.round(compared_to_peak * 10)/10)*-1+’% lower than that.’

}

details_str+=’, or ‘+(Math.round(fish_per_angler * 1000)/1000)+’ ‘+fish_type+’/angler.’

average_fish_count = JSON.stringify(json[location_str][‘5 year average’][months[newest_month_index]][fish_options[fish_index]])

average_angler_count=JSON.stringify(json[location_str][‘5 year average’][months[newest_month_index]][‘Anglers’]);

average_fish_per_angler = parseFloat(average_fish_count)/parseFloat(average_angler_count)

improvement = (((fish_per_angler-average_fish_per_angler)/average_fish_per_angler)*100)

if(improvement >= 0.0){

details_str+='<br><br>That is ‘+(Math.round(improvement * 10)/10)

details_str+=’% better than ‘

details_str+=’the average for ‘+months[newest_month_index]+’.’

}else if (improvement < 0.0){

details_str+='<br><br>That is ‘+(Math.round(improvement * 10)/10)*-1

details_str+=’% worse than ‘

details_str+=’the average for ‘+months[newest_month_index]+’.’

}else{

details_str+='<br><br>’

}

catch_str = ”

if(improvement >= 5.0){

catch_str += ‘<p style=”color:green”>Up (+’

catch_str +=(Math.round(improvement * 10)/10)

catch_str += ‘%)’

}else if (improvement >= -5.0){

catch_str += '<p style="color:gray">Approx. Neutral ('

catch_str += (Math.round(improvement * 10)/10)

catch_str += ‘%)’

}else if (improvement < -5.0 ){

catch_str += ‘<p style=”color:red”>Down (‘

catch_str += (Math.round(improvement * 10)/10)

catch_str += ‘%)’

} else {

catch_str += ‘<p style=”color:gray”>-‘

}

new_output_array_line.push(catch_str+'</p>’)

new_output_array_line.push(catch_compared_to_high)

details_str+=’In the previous five years, ‘

details_str+=average_angler_count

details_str+=’ anglers/year were interviewed and ‘

details_str+=average_fish_count

details_str+=’ ‘

details_str+=fish_type

details_str+=’ found, equating to ‘

details_str+=(Math.round(average_fish_per_angler * 1000)/1000)

details_str+=’ ‘

details_str+=fish_type

details_str+=’/angler. ‘

details_str+='<br><br>The typical highest catch for a year for ‘+fish_options[fish_index]+’ is in ‘+months[high_month_index]+’ at ‘+(Math.round(high_monthly_catch_per_angler * 10000)/10000)+’ ‘+fish_options[fish_index]+’/angler. ‘+insert_str

new_output_array_line.push(details_str)

output_array.push(new_output_array_line)

new_output_array_line=[]

fish_index++;

}

}

if(first_run==false){

////window.alert(output_array)

newtable =”

newtable+='<table id=”fish_table” style=”width:100%;text-align:center;”>’

newtable+='<tr>’

newtable+='<td colspan=”5″ style=”background-color:#7d1128;color:white”>’

newtable+='<font size=”4″>’+header+'</font>’

newtable+='</td>’

newtable+='</tr>’

newtable+='<tr>’

newtable+='<td style=”background-color:#c3b299;color:white”>’+output_array[0][0]+'</td>’//newtable+='<th>Fish</th>’

newtable+='<td style=”background-color:#c3b299;color:white”>’+output_array[0][1]+'</td>’//newtable+='<th>Month</th>’

newtable+='<td style=”background-color:#c3b299;color:white”>’+output_array[0][2]+'</td>’//newtable+='<th>Catch</th>’

newtable+='<td style=”background-color:#c3b299;color:white”>’+output_array[0][3]+'</td>’//newtable+='<th>Details</th>’

newtable+='<td style=”background-color:#c3b299;color:white”>’+output_array[0][4]+'</td>’

newtable+='</tr>’

i=1;//skip header

while(i<output_array.length){

newtable+='<tr>’

newtable+='<td style=”vertical-align: text-top;background-color:#f0ece5;”>’+output_array[i][0]+'</td>’//'<td>Chinook</td>’

newtable+='<td style=”vertical-align: text-top;background-color:#f0ece5;”>’+output_array[i][1]+'</td>’//'<td>Feb</td>’

newtable+='<td style=”vertical-align: text-top;background-color:#f0ece5;”>’+output_array[i][2]+'</td>’//'<td>Down</td>’

newtable+='<td style=”vertical-align: text-top;background-color:#f0ece5;”>’+output_array[i][3]+'</td>’//'<td>So far this Feb, WDFW has interviewed 29 anglers and found 4 Chinook. That 0.1379 Chinook/angler is 37.7% worse than the average for Feb. In the previous five years, 2941.2 anglers/year were interviewed and 651.4 Chinook found, equating to 0.2215 Chinook/angler.</td>’

newtable+='<td style="vertical-align: text-top;background-color:#f0ece5;"><p style="text-align:left;">'+output_array[i][4]+'</p></td>'

newtable+='</tr>'

i++;

}

newtable+='</table>'

document.getElementById(‘fish_table’).innerHTML = newtable;

////window.alert(newtable)

}

}

</script>

</head>

<body>

<header>

<h1 >Alarming Hornet</h1>

<h2>A place to host some web projects</h2>

</header>

<div class=”wrap” style=”background-color: #c3b299 ;padding: 1px;”>

<p> <a href=”https://alarminghornet.com/stocking-report-map/” style=”margin-left: 85px;color: white;text-decoration: none;font-family: ‘Lato’, sans-serif”>INTERACTIVE STOCKING REPORTS</a></p>

</div>


<div id=”floating-panel” style=”width:90%;height:20%”>

<text id=’heading’>Fish Select</text>

<button id=”Chinook” onclick=”add_or_remove_fish(‘Chinook’);”>Chinook</button>

<button id=”Chum” onclick=”add_or_remove_fish(‘Chum’);”>Chum</button>

<button id=”Coho” onclick=”add_or_remove_fish(‘Coho’);”>Coho</button>

<button id=”Halibut” onclick=”add_or_remove_fish(‘Halibut’);”>Halibut</button>

<button id=”Lingcod” onclick=”add_or_remove_fish(‘Lingcod’);”>Lingcod</button>

<button id=”Pink” onclick=”add_or_remove_fish(‘Pink’);”>Pink</button>

<button id=”Sockeye” onclick=”add_or_remove_fish(‘Sockeye’);”>Sockeye</button>

<button id=”All Fish” onclick=”add_or_remove_fish(‘All Fish’);”>All Fish</button>

<button id=”All Salmon” onclick=”add_or_remove_fish(‘All Salmon’);”>All Salmon</button>

<br><text id=’heading2′>Area Select</text>

<select id=”selectNumber”>

<option>All</option>

</select>

</div>

<p></p><p></p>

<div>

<canvas id=”line-chart” responsive=”true”></canvas>

</div>

<p id="fish_table"></p>

</body>

</html>

(E) The HTML that grabs the JSON from the Flask app and displays the Chart.js graph.

I like how the website looks. Hopefully it will be useful to others. There are some areas of the current code that still need to be addressed:

- The chart breaks when the browser is resized. A refresh will get it working again.

- The JSON is way bigger than needed. It carries 2013-2021 plus an average, and I only use one year and the average. I could fine-tune Flask to only pass what is asked for. Maybe it doesn’t matter: it appears to be 690KB, smaller than an image.

- Pink salmon only run every other year. They will be here in 2021, and they were not here in 2020. They were here in 2019. The definition or use of “five year average” for Pink salmon needs to be addressed.

- I should add some explanatory/introductory text at the top.

- The output table is sorted by month. I think sorting by fish makes more sense. That was a weird sentence to write.

- And, after a test run with the Python actually deployed in AWS:

- I need to get HTTPS going. This is a start.

- When pulling from AWS, instead of locally, the chart takes a while to load. I suspect it is not bandwidth but the low AWS tier I am on, as the Flask app is doing a lot of computation and it takes the limited VM some time. I should move most of that computation to the initial Python script and make the Flask app mostly just serve the JSON.
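Two of the items above, the oversized JSON and the Pink salmon average, could be sketched on the Python side roughly like this. It is only a sketch under assumptions: the function names `trim_payload` and `run_year_average` are made up for illustration, and the nested layout (area, then year, then month, then fish or 'Anglers') is inferred from how the JavaScript indexes the JSON. The Flask route that would call these is not shown.

```python
MONTHS = ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun',
          'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec']


def trim_payload(creel, location, year):
    """Keep only one area's requested year plus its stored average.

    Hypothetical helper: assumes creel[location][str(year)] and
    creel[location]['5 year average'] exist, as the JavaScript expects.
    """
    area = creel[location]
    return {
        location: {
            str(year): area[str(year)],
            '5 year average': area['5 year average'],
        }
    }


def run_year_average(creel, location, fish, newest_year,
                     run_every=2, max_run_years=5):
    """Average catch per angler by month, using only earlier run years.

    Hypothetical helper: for a fish that returns every `run_every` years
    (Pink: 2021, 2019, 2017, ...), the off years are skipped entirely.
    Returns {month: fish per angler, or None when no anglers were interviewed}.
    """
    area = creel[location]
    # Earlier years on the same cycle as newest_year, newest first.
    candidates = [str(newest_year - run_every * k)
                  for k in range(1, max_run_years + 1)]
    run_years = [y for y in candidates if y in area]

    averages = {}
    for month in MONTHS:
        fish_total = sum(float(area[y][month][fish]) for y in run_years)
        angler_total = sum(float(area[y][month]['Anglers']) for y in run_years)
        # Avoid dividing by zero for months with no interviews.
        averages[month] = fish_total / angler_total if angler_total > 0 else None
    return averages
```

With something like this, the Flask app would only need to serialize the result of `trim_payload(...)`, and the Pink row could use `run_year_average(...)` in place of the stored average. Pooling totals across run years (total fish divided by total anglers) is one possible definition of the average, not the only one.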

Stay tuned.